Case Study: iCub Robot using CURRERA R to navigate

Project description

Period:

February 2013 – September 2013

Name:

Usage of computer vision and humanoid robotics to create autonomous robots

Project focus:

The practical goal of the project is to “teach” a specialized humanoid robot, the iCub, to solve any ball-in-a-maze puzzle: a ball of a given color is placed at the ‘start’ position of the maze, and the robot navigates it past the obstacles to the ‘finish’ position.

The robot moves the ball through the maze by physically tilting the base of the puzzle with its hand, and it follows the most efficient path available. If no path exists, the robot does not begin to solve the maze.
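The source does not say which planning algorithm the iCub used. As a minimal sketch of the behavior described above, assuming the maze has already been reduced to a grid of free and blocked cells, a breadth-first search returns a shortest route or reports that none exists. The grid encoding, the function name `shortest_path`, and the sample mazes are illustrative assumptions, not part of the project.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid (illustrative only).

    grid: list of equal-length strings; '#' marks a wall, any other
          character is a free cell (this encoding is an assumption).
    Returns the list of (row, col) cells from start to goal, or None
    when the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None                     # no route: do not start tilting


solvable = ["S.#",
            ".##",
            "..F"]
blocked = ["S#F"]

route = shortest_path(solvable, (0, 0), (2, 2))
no_route = shortest_path(blocked, (0, 0), (0, 2))
```

Breadth-first search is chosen here only because it guarantees the fewest grid steps on an unweighted grid; a real controller would also have to account for how the ball rolls when the board is tilted.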

The importance of this experiment is profound. This experiment marks the beginnings of letting a robot truly “think” that its actions have an impact on its immediate surroundings.

When a child is born, initially it is not able to comprehend objects, shapes, etc. and cannot focus on anything that is not within its direct gaze.

However, over time, the child learns that it can move and play with objects, thus realizing that its motor hand movements have changed the environment. The child learns this from neurological feedback loops like the rattling of toys.

Method

Who was behind the project:

- Vishnu Nath (Principal Investigator) – Vishnu Nath is currently working as a software engineer at Microsoft Corporation. He completed his MS and BS degrees at the University of Illinois at Urbana-Champaign. Vishnu has authored two books and is the primary author of several research papers that have been presented at major academic conferences. He has also received various international awards and is a member of several academic honor societies. He is the principal investigator in this project, which was done as part of his MS thesis.

- Dr. Stephen Levinson (Vishnu’s MS thesis advisor) – He worked at AT&T Bell Laboratories from 1976 to 1997 and, from 1990 to 1997, served as head of the Linguistics and Research Department. He is a professor at the University of Illinois, Department of Electrical and Computer Engineering, and a full-time Beckman Institute faculty member in the Artificial Intelligence Group.

Current Liaison between Ximea and UIUC:

- Onyeama Osuagwu - He is pursuing an M.S. in Applied Mathematics, and an M.S. and Ph.D. in Electrical and Computer Engineering at the University of Illinois at Urbana-Champaign. He is currently working at the Beckman Institute under the advisement of Professor Stephen Levinson, and was a research colleague of Vishnu.

Project Components:

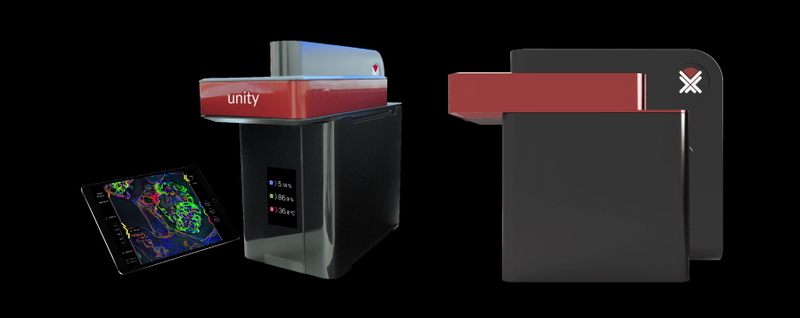

For the successful completion of the project, the most important components were the iCub robot itself and the CURRERA Starter Kit (with CURRERA RL04C camera).

The starter kit also came with demo applications for a variety of computer vision libraries. The library used for this experiment was OpenCV.

All the vision-related processing was performed by the CURRERA camera. This includes determining all the internal and external edges of the maze and the initial position of the ball.

This information was then transmitted to the iCub, which stored it in its RAM, freeing the CURRERA camera to deal exclusively with the live camera feed and its processing.

The CURRERA unit is responsible for detecting the edge markers, the centroid of the ball, and the ball's current position relative to the markers at all times.

Further important tasks included filtering out other possible candidates for the ball (objects with similar HSV values) and making sure that the entire board is visible at all times.

These tasks rely on inverse homography, and the entire computation is done within the CURRERA unit itself. Only the output is sent to the iCub for further processing.

We believe that the CURRERA unit was able to handle such loads seamlessly because of its Atom Z530 processor and 1 GB of RAM, which make it a powerful computer in its own right.
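The source attributes the candidate filtering to inverse homography and gives no further detail. The sketch below illustrates a different, simpler idea that is often used for the same purpose: given a binary mask of pixels that passed the color test, keep only the largest connected blob and report its centroid. The function name `find_ball`, the `min_area` threshold, and the sample mask are hypothetical.

```python
from collections import deque


def find_ball(mask, min_area=4):
    """Return the (row, col) centroid of the largest blob in a 0/1 mask.

    Blobs smaller than min_area pixels are treated as noise, so the
    function returns None when no blob is large enough. The threshold
    is an assumed value for illustration.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    best = []
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            # Flood-fill one 4-connected blob starting at (r0, c0).
            blob, queue = [], deque([(r0, c0)])
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                blob.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and mask[nr][nc] and not seen[nr][nc]):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(blob) > len(best):
                best = blob
    if len(best) < min_area:
        return None
    n = len(best)
    return (sum(r for r, _ in best) / n, sum(c for _, c in best) / n)


# One stray pixel at the top-left and a 2x2 "ball" in the middle.
mask = [[1, 0, 0, 0, 0],
        [0, 0, 1, 1, 0],
        [0, 0, 1, 1, 0],
        [0, 0, 0, 0, 0]]
centroid = find_ball(mask)
```

Here the stray pixel is ignored and the centroid of the 2x2 blob is returned in pixel coordinates.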

Camera Substitution

The iCub comes with two cameras of its own, but they are CCD-based, whereas the CURRERA is CMOS-based. The stock cameras were prone to noise and slow speeds.

For these performance reasons, the iCub’s original camera was removed and replaced with the CURRERA camera.

The base of the iCub’s skull contains the PC104, which covers the entire interior of the skull. As a result, the camera is not visible from the back of the robot, but can be seen as the iris of an eye from the front. All the wires from the BOB144 have been passed through the neck into the body and are connected to the iCub’s control systems so that the loop is maintained.

Identifying the ball

The first step is to determine which object(s) can be classified as the ball. Since the ball was going to be red, it seemed natural to program the CURRERA module to look for red objects, i.e. objects with a high R value in RGB.

The following figure gives an indication of the range of values seen by the XIMEA CURRERA camera.

Because the RGB values varied so little, the smart camera was programmed to look at the HSV values instead. The results were much more promising.
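To illustrate why HSV can be easier to threshold than a raw R value, here is a minimal sketch using Python's standard `colorsys` module. The thresholds (`max_hue_dist`, `min_sat`, `min_val`) and the sample colors are assumptions chosen for the example, not values from the project, which used OpenCV on the camera itself.

```python
import colorsys


def is_red(rgb, max_hue_dist=0.05, min_sat=0.5, min_val=0.2):
    """Classify an 8-bit (R, G, B) pixel as red using HSV thresholds.

    All three thresholds are hypothetical values for illustration.
    """
    r, g, b = (channel / 255.0 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # Red sits at hue 0, which wraps around to 1, so measure the
    # distance to whichever end of the hue circle is closer.
    hue_dist = min(h, 1.0 - h)
    return hue_dist <= max_hue_dist and s >= min_sat and v >= min_val


samples = {
    "bright red ball": (220, 30, 30),
    "red ball in shadow": (90, 12, 12),
    "near-white highlight": (230, 225, 220),
    "orange object": (230, 120, 30),
}
results = {name: is_red(rgb) for name, rgb in samples.items()}
```

In this example the near-white highlight has a higher R value than the shadowed ball, so a simple "R is high" rule would pick the wrong pixel, while the hue and saturation test accepts both red samples and rejects the other two.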

The camera accurately detected the four corners of the maze and could detect the ball easily. The corner detection and the resulting inverse homography are shown in the next two figures.

This is almost all of the information that the camera outputs to the iCub for further processing.

By this point, CURRERA has already performed a significant amount of computer vision processing. The camera has detected the four edges of the board, the internal paths of the board, and the relative position of the ball, and has performed an inverse homography in real time.

Yet CURRERA can do something even more impressive, which made it perfect for this project. It is also able to segment the image and the maze path (based on the HSV values), so that the iCub can generate the optimum path right away.

Furthermore, it can detect the start and end points of the maze, based on the tags attached to the maze. The following figures show the true computing capability of the CURRERA camera.
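The source does not give the formulation it used. One plausible reading of "inverse homography" is a perspective transform that maps the tilted camera view of the board back to a flat, top-down coordinate frame, computed from the four detected corners. The sketch below solves for such a transform in plain Python; the corner pixel coordinates and the function names are hypothetical, and a production system would more likely use an OpenCV routine.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination
    with partial pivoting. Raises ZeroDivisionError if a is singular."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src, dst):
    """Return the 8 coefficients h0..h7 of the perspective transform
    that maps each of the four src points onto its dst point."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    return solve(a, b)


def warp(h, point):
    """Apply the transform h to a single (x, y) point."""
    x, y = point
    w = h[6] * x + h[7] * y + 1.0
    return ((h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w)


# Hypothetical pixel positions of the four board corners in the image,
# and the unit square they should map to in the top-down board frame.
corners_px = [(100, 80), (520, 120), (560, 400), (60, 360)]
corners_board = [(0, 0), (1, 0), (1, 1), (0, 1)]

H = homography(corners_px, corners_board)
ball_on_board = warp(H, (310, 240))   # a ball seen at this pixel
```

Once the ball's pixel position is expressed in board coordinates, its location relative to the corner markers no longer depends on how the board is tilted in the camera view.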

Video 1 - http://www.youtube.com/watch?v=78u8FkVc3Jc

Video 2 - http://www.youtube.com/watch?v=j7DdGSOog1g

Media and Public Recognition

In recognition of the experiment and its implications for the field of AI, Vishnu Kamalnath was awarded the Hind Rattan Award, 2014, by the Government of India.

The work was also mentioned in American newspapers such as the Daily Illini (URL: http://www.dailyillini.com/news/article_8d56858c-62cc-11e3-a8d9-0019bb30f31a.html) and the News Gazette.

Furthermore, related work is also being performed at various labs all over the European Union.

Acknowledgements

Kamalnath would also like to thank his advisors, Dr. Stephen Levinson and Dr. Paris Smaragdis, for their support and technical advice that made this project a reality.

More about the product HERE

Study can be downloaded HERE

Contact info: Vishnu Nath Kamalnath and Onyeama Osuagwu, from the Beckman Institute at the University of Illinois
