The 4th generation of SONY Pregius CMOS image sensors, called Pregius S, includes several interesting models:

IMX530, IMX531, IMX532, IMX535, IMX536, IMX537

IMX540, IMX541, IMX542, IMX545, IMX546, IMX547

Among their many remarkable features, the IMX53x models offer an HDR mode.

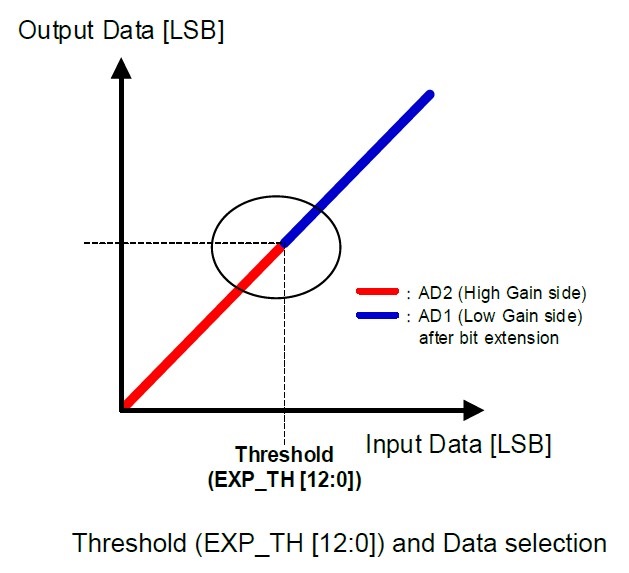

The feature that enables it is called "Dual ADC".

It digitizes the signal of each exposure through two ADCs with different analog gains, producing two 12-bit raw frames.

If the ratio of these gains is about 24 dB (roughly 16x), the two 12-bit raw frames with different gains can be combined into a single 16-bit raw image.
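A quick back-of-the-envelope check of the 24 dB figure is shown below as a minimal Python sketch. It assumes the usual 20·log10 convention for amplitude (voltage) gain in dB; the function names are illustrative and not part of any SDK.

```python
import math

def db_to_ratio(db: float) -> float:
    """Convert an amplitude gain difference in dB to a linear ratio (20*log10 convention)."""
    return 10 ** (db / 20)

def merged_bit_depth(adc_bits: int, gain_ratio: float) -> int:
    """Bit depth needed to hold both frames on a common linear scale.

    The High gain frame resolves signals gain_ratio times finer than the
    Low gain frame, so the merged range grows by about log2(gain_ratio) bits.
    """
    return adc_bits + round(math.log2(gain_ratio))

ratio = db_to_ratio(24.0)
print(f"24 dB -> x{ratio:.2f}")           # 24 dB -> x15.85
print(merged_bit_depth(12, ratio), "bits")  # 16 bits
```

A gain ratio of about 16 adds about 4 bits (2^4 = 16) on top of the 12-bit ADC output, which is where the 16-bit range comes from.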

The main idea of the HDR mode is to extend the dynamic range by merging two frames of lower bit depth that share the same exposure.

This guarantees that both frames are exposed at the same time and are not spatially shifted relative to each other.

The Dual ADC feature was originally introduced in the 3rd generation of SONY Pregius CMOS image sensors, but there the HDR processing had to be implemented outside the sensor.

In the latest generation, this HDR processing is done inside the image sensor, which makes it more convenient to work with.

Dual ADC mode with on-sensor combination (combined mode) is available only on the high-speed versions of these sensors, i.e. the IMX53x models.

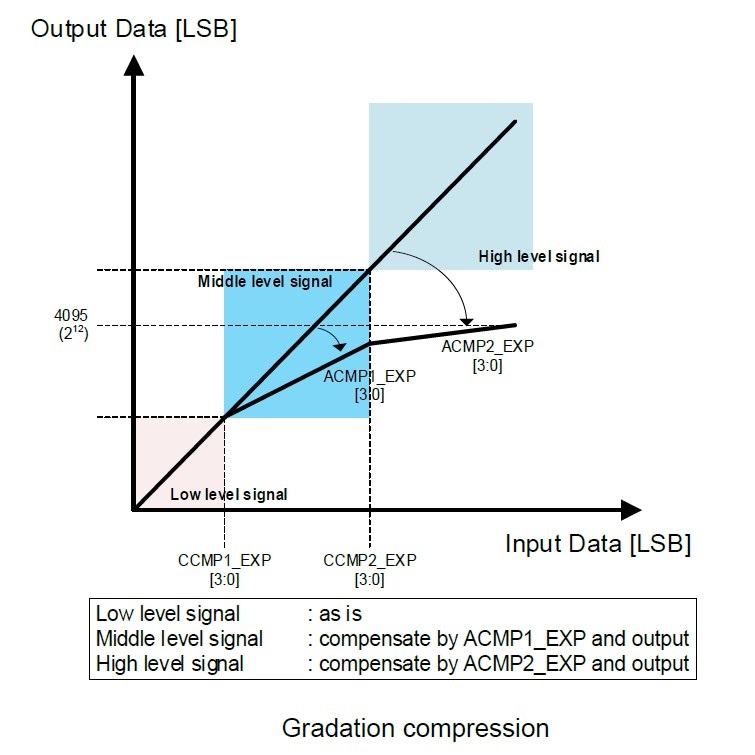

The picture below gives detailed information about the PWL (piecewise linear) curve, which is applied inside the image sensor after the two frames are merged.

It shows how gradation compression is implemented in the image sensor.

The PWL curve uses two pairs of knee points to compress the gradation from the 16-bit range to 12 bits.

The knee points are derived from the Low gain and High gain values and from the gradation compression parameters.
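To illustrate how a PWL curve compresses gradation, here is a minimal Python sketch. The knee points below are hypothetical values chosen for readability, not the actual IMX532 settings; `pwl_compress` is an illustrative helper, not a sensor or SDK API.

```python
from bisect import bisect_right

# Hypothetical knee points as (16-bit input, 12-bit output) pairs, including
# the end points. Real values depend on the sensor's gain and compression settings.
KNEES = [(0, 0), (1024, 1024), (8192, 2816), (65535, 4095)]

def pwl_compress(x: int, knees=KNEES) -> int:
    """Map a 16-bit linear value to 12 bits with a piecewise linear curve."""
    xs = [k[0] for k in knees]
    x = min(max(x, xs[0]), xs[-1])                     # clamp to the curve's domain
    i = min(bisect_right(xs, x) - 1, len(knees) - 2)   # index of the segment containing x
    (x0, y0), (x1, y1) = knees[i], knees[i + 1]
    # Linear interpolation inside the segment: slope 1 for dark values,
    # progressively smaller slopes (stronger compression) for bright ones.
    return round(y0 + (x - x0) * (y1 - y0) / (x1 - x0))

for v in (512, 4608, 65535):
    print(v, "->", pwl_compress(v))
# 512 -> 512
# 4608 -> 1920
# 65535 -> 4095
```

Since the input has only 65536 possible values, the curve would normally be precomputed once as a lookup table rather than evaluated per pixel.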

Here is an example of real Dual ADC mode parameters for the SONY IMX532 image sensor:

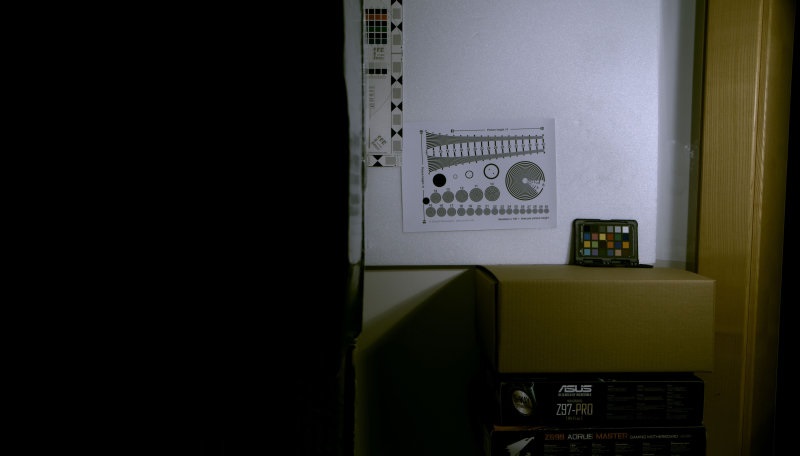

The frames used in the examples below were captured with the IMX532 image sensor in the XIMEA MC161CG-SY-UB-HDR camera, which uses exactly these Dual ADC parameters.

Comparing two images captured with a gain ratio of 16 (High gain is 16 times the Low gain) and an exposure ratio of 1/16 (long exposure for Low gain, short exposure for High gain) shows that they look alike.

However, the High gain image exhibits more noise and more hot pixels because of the strong analog amplification.

Apart from the standard Dual ADC combined mode, there is a popular approach that can give good results with minimal effort: using only the Low gain image and applying custom tone mapping instead of the PWL curve.

In that case, the dynamic range is lower, but the image can have lower noise compared to images from the combined mode.

It makes sense to try to improve on the results of the on-sensor HDR processing in Dual ADC mode.

This could be done by implementing more sophisticated algorithms for image merging and tone mapping.

GPU-based processing is usually very fast, so even with these more complex algorithms it can still process HDR image streams in real time.

Real-time performance is essential for many camera applications.

A new image processing pipeline has been implemented that processes Dual ADC frames from SONY Pregius image sensors on NVIDIA GPUs.

It can process either kind of HDR mode output from SONY image sensors: one 12-bit HDR raw image (combined mode) or two 12-bit raw frames (non-combined mode).

The results can be better not only because of its merging and tone mapping procedures, but also thanks to high-quality debayering, which also affects the quality of the processed images.

Why use GPU modules? They provide much higher performance and better image quality than CPU-based processing.

Below are several example pipelines for different processing approaches.

Low gain image processing is the simplest and most widely accepted method; it is effectively the same as having Dual ADC mode switched off.

The Low gain 12-bit raw image has less dynamic range, but also less noise.

In this case, either a 1D LUT or a more complex tone mapping algorithm can be applied to get better results than the combined 12-bit HDR image that comes directly from the SONY image sensor.
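A 1D LUT works well here because a 12-bit image has only 4096 possible input codes, so any tone curve can be precomputed once and applied with a single lookup per pixel. The sketch below assumes a plain gamma curve (1/2.2) and 8-bit output purely as a stand-in for a tuned tone curve; `build_lut` and `apply_lut` are hypothetical helper names.

```python
def build_lut(in_bits: int = 12, out_bits: int = 8, gamma: float = 1 / 2.2) -> list:
    """Precompute a 1D LUT: one output value for every possible input code."""
    in_max = (1 << in_bits) - 1
    out_max = (1 << out_bits) - 1
    # Example curve only: a power law that lifts shadows and compresses highlights
    return [round(out_max * (v / in_max) ** gamma) for v in range(in_max + 1)]

def apply_lut(image: list, lut: list) -> list:
    """Tone map a 2D image (list of rows) with one table lookup per pixel."""
    return [[lut[p] for p in row] for row in image]

lut = build_lut()
print(apply_lut([[0, 64, 4095]], lut))
```

The cost of the curve itself does not matter at run time, which is why a LUT is a cheap first step before moving to local tone mapping.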

Here is a brief outline of the Low gain image processing pipeline:

Fig.1. Low gain image processing for IMX532

Although the SONY sensor provides a ready-to-use 12-bit raw HDR image in its Dual ADC mode, the image quality can still be improved.

For this purpose, custom tone mapping can be applied.

In the analyzed tests, this consistently gave better results.

Here is a brief outline of the combined mode image processing pipeline:

Fig.2. SONY Dual ADC combined mode image processing for IMX532 with a custom tone mapping

To process both raw frames from the SONY image sensor, both must be sent to the PC over the camera interface.

This can become a bottleneck if the camera interface bandwidth is not high enough.

Some camera models may require a lower frame rate to cope with bandwidth limitations.

If the camera uses a PCIe (PCI Express) interface, it may be possible to send both raw images in real time without frame drops.
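The bandwidth concern is easy to quantify. This sketch assumes tightly packed 12-bit pixels, ignores protocol overhead, and uses a hypothetical frame rate of 50 fps; the frame size is the IMX532 resolution given in Table 1.

```python
def raw_bandwidth_gbps(width, height, bits_per_pixel, fps, frames_per_exposure=1):
    """Payload bandwidth in Gbit/s for tightly packed raw frames (no protocol overhead)."""
    return width * height * bits_per_pixel * fps * frames_per_exposure / 1e9

W, H, BITS = 5328, 3040, 12   # IMX532 frame, 12-bit raw
fps = 50                      # hypothetical frame rate for illustration

combined = raw_bandwidth_gbps(W, H, BITS, fps)          # one HDR frame per exposure
non_combined = raw_bandwidth_gbps(W, H, BITS, fps, 2)   # Low gain + High gain frames

print(f"combined: {combined:.2f} Gbit/s")          # combined: 9.72 Gbit/s
print(f"non-combined: {non_combined:.2f} Gbit/s")  # non-combined: 19.44 Gbit/s
```

The non-combined mode simply doubles the payload, so at the same frame rate it needs twice the interface bandwidth of the combined mode.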

As soon as the two raw frames (Low gain and High gain) arrive, preprocessing starts.

The frames are then merged into a single 16-bit linear image, which goes straight to the tone mapping algorithm.
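The merge step can be sketched in a few lines of Python. This is a generic approach, not necessarily the one used on-sensor by SONY or in the Fastvideo SDK: the Low gain sample is scaled by the gain ratio onto the High gain scale; the High gain sample is used in dark areas, the scaled Low gain sample near High gain saturation, with a linear blend in between to avoid visible seams. The blend thresholds are hypothetical, and black level is assumed to be already subtracted during preprocessing.

```python
GAIN_RATIO = 16                  # High gain / Low gain (about 24 dB)
BLEND_LO, BLEND_HI = 3000, 3800  # hypothetical transition band, in High gain codes
OUT_MAX = 65535                  # 16-bit linear output

def merge_pixel(lg: int, hg: int) -> int:
    """Merge one Low gain / High gain pixel pair into a 16-bit linear value."""
    lg_scaled = lg * GAIN_RATIO      # bring the Low gain sample onto the High gain scale
    if hg <= BLEND_LO:               # dark: High gain has finer quantization
        value = hg
    elif hg >= BLEND_HI:             # near High gain saturation: trust Low gain
        value = lg_scaled
    else:                            # transition band: linear crossfade
        w = (hg - BLEND_LO) / (BLEND_HI - BLEND_LO)
        value = (1 - w) * hg + w * lg_scaled
    return min(round(value), OUT_MAX)

def merge_frames(low: list, high: list) -> list:
    """Merge two 12-bit frames (lists of rows) into one 16-bit linear frame."""
    return [[merge_pixel(l, h) for l, h in zip(row_l, row_h)]
            for row_l, row_h in zip(low, high)]

print(merge_frames([[100, 200, 4095]], [[1600, 3400, 4095]]))
# [[1600, 3300, 65520]]
```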

Typically, good tone mapping algorithms are more complex than a simple PWL curve.

So even better results can be achieved, but the whole operation takes more time.

To complete these tasks as quickly as possible, high-performance GPU-based image processing may be the best approach.

It can also deliver better image quality and higher dynamic range than SONY's combined HDR mode or Low gain image processing.
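As one example of a tone mapping operator more elaborate than a PWL curve, here is a minimal sketch of the global Reinhard operator: scale the linear values by the scene's log-average, then compress with x/(1+x). This well-known method is swapped in for illustration only and is not claimed to be the algorithm used in the pipeline described here; `reinhard_global` is a hypothetical name, and the code works on a flat list of linear values (e.g. luminance).

```python
import math

def reinhard_global(values: list, key: float = 0.18, out_bits: int = 8) -> list:
    """Global Reinhard-style tone mapping of linear HDR values."""
    eps = 1e-6  # avoids log(0) for black pixels
    # Log-average luminance: a robust estimate of the overall scene brightness
    log_avg = math.exp(sum(math.log(eps + v) for v in values) / len(values))
    out_max = (1 << out_bits) - 1
    result = []
    for v in values:
        scaled = key * v / log_avg                               # map scene average to `key`
        result.append(round(out_max * scaled / (1 + scaled)))   # compress into [0, 1)
    return result

print(reinhard_global([0, 100, 1000, 65520]))
```

Unlike a fixed PWL curve, this operator adapts to the image content through the log-average; local operators go further by adapting per region, at a higher computational cost.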

Here is the GPU pipeline for the HDR workflow:

Fig.3. SONY Dual ADC non-combined (two-image) processing for IMX532

In this workflow, the most important stages are merging, global/local tone mapping, and demosaicing.

This image processing pipeline can be implemented with the Fastvideo SDK, which is optimized for NVIDIA GPUs and runs very fast.

The benefits of using Dual ADC mode on the GPU are as follows.

After initial testing, it appears that the best image quality can be reached in the following modes:

1: Simultaneous processing of two 12-bit raw images in the non-combined mode.

2: Processing of one 12-bit raw frame in the combined mode with a custom tone mapping algorithm.

In the non-combined mode, processing time and interface bandwidth limitations may become a problem.

In the combined mode, image quality is comparable, the processing pipeline is simpler (so performance is better), and less bandwidth is needed; it can therefore be recommended for most use cases.

In general, with a suitable GPU, image processing can be done in real time at the maximum frame rate.

The above pipelines apply to all high-speed 4th-generation SONY Pregius S image sensors that offer Dual ADC combined mode.

The kernel times below were measured in the combined mode on both a Jetson AGX Xavier and a GeForce RTX 2080 Ti.

These GPU modules provide high dynamic range and very good image quality, so knowing their performance is valuable.

The table lists timings only for the main general-purpose image processing modules, since the full pipeline varies between applications.

| Algorithm \ GPU | Jetson AGX Xavier | GeForce RTX 2080 Ti |
|---|---|---|
| Preprocessing | 3.6 ms | 0.71 ms |
| MG Debayer | 11.1 ms | 1.46 ms |
| Color space conversion | 2.0 ms | 0.43 ms |
| Global tone mapping | 2.6 ms | 0.50 ms |

Table 1. Kernel time in ms for Sony IMX532 raw frame processing in the combined mode (5328×3040, Bayer, 12-bit)
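Summing the kernel times in Table 1 gives a rough upper bound on throughput. This sketch assumes the four kernels run one after another and ignores data transfers and any other pipeline stages, so real frame rates will be lower.

```python
# Kernel times from Table 1, in milliseconds:
# preprocessing, MG debayer, color space conversion, global tone mapping
KERNEL_MS = {
    "Jetson AGX Xavier": [3.6, 11.1, 2.0, 2.6],
    "GeForce RTX 2080 Ti": [0.71, 1.46, 0.43, 0.50],
}

for gpu, times in KERNEL_MS.items():
    total = sum(times)
    print(f"{gpu}: {total:.2f} ms -> up to {1000 / total:.0f} fps (kernels only)")
# Jetson AGX Xavier: 19.30 ms -> up to 52 fps (kernels only)
# GeForce RTX 2080 Ti: 3.10 ms -> up to 323 fps (kernels only)
```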

Credits

Fastvideo Blog:

https://www.fastcompression.com/blog/gpu-hdr-processing-sony-pregius-image-sensors.htm