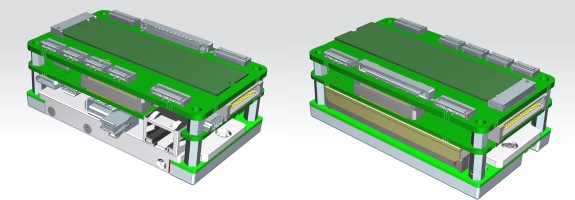

Fig.1. Multicamera system on Jetson TX2

Real-time remote control of vision systems has become an important part of many applications, especially for autonomous vehicles such as UAVs and ROVs.

Moreover, drones and mobile robots that are controlled remotely over a wireless network are often equipped with multi-camera systems.

To enable these applications, their vision setups require minimal latency to ensure smooth video transfer and instant feedback.

Multiple cameras complicate the task, and this is where an NVIDIA Jetson module that multiplexes several cameras can help.

Low-latency video streaming is a common requirement in many teleoperation scenarios, such as telesurgery and virtual or augmented reality.

Some of these applications also run computer vision algorithms and neural networks on the video in real time.

To evaluate the latency and the performance of such systems, it is essential to measure the glass-to-glass (G2G) delay for video acquisition and video transmission.

Glass-to-glass video latency, also called end-to-end (E2E) video delay, is the time from the moment the photons of a visible event pass through the lens glass of the camera until the moment the final image appears on the glass of the monitor.

Fig.2. Low latency video processing

The G2G measurements are non-intrusive and can be applied to a wide range of imaging systems.

Each camera has a fixed frame rate and produces new images at constant time intervals, but real-world events are never synchronized with the camera frame rate: an event can happen at any moment within a frame interval. This is exactly the situation in live real-time measurements, because the observed events are triggered independently of the camera.

That is why the measured G2G delay values are non-deterministic.
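The effect of this missing synchronization can be illustrated with a small simulation. It is a sketch under a simplified model that is our assumption, not the author's method: frames start every 1/fps seconds, and an event becomes visible only in the first frame that starts at or after it. The names `wait_for_next_frame` and `simulate` are hypothetical.

```python
import math
import random


def wait_for_next_frame(event_time_s: float, fps: float) -> float:
    """Delay from an event until the next frame boundary.

    Simplified model (assumption): frames start at t = k / fps and an event
    is only registered by the first frame that starts at or after it.
    """
    period = 1.0 / fps
    next_frame_start = math.ceil(event_time_s / period) * period
    return next_frame_start - event_time_s


def simulate(fps: float, n_events: int = 10_000, seed: int = 1) -> tuple[float, float]:
    """Return (min, max) of the frame-alignment delay for random event times."""
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    delays = [wait_for_next_frame(rng.uniform(0.0, 10.0), fps) for _ in range(n_events)]
    return min(delays), max(delays)


if __name__ == "__main__":
    lo, hi = simulate(fps=30.0)
    print(f"30 fps: extra delay ranges from {lo * 1e3:.1f} ms to {hi * 1e3:.1f} ms")
```

Under this model, at 30 fps the frame alignment alone adds anywhere between 0 and about 33.3 ms (one frame period), so two otherwise identical measurements can differ by up to one frame.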

The idea of a simple glass-to-glass test is to show two time values on the monitor: the current time and the time of capture. The process and the components used are described below.
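One possible way to produce the "current time" value is a millisecond clock running on the monitor; the camera is pointed at it, and the received stream is displayed on the same monitor. The script below is a minimal sketch that assumes a terminal window and local time; `format_clock` and `run_clock` are hypothetical names. Keep in mind that the visible resolution is limited by the monitor refresh rate: at 60 Hz the displayed value can only change about every 16.7 ms.

```python
import sys
import time
from datetime import datetime


def format_clock(now: datetime) -> str:
    """Format a timestamp as HH:MM:SS.mmm (millisecond resolution)."""
    return now.strftime("%H:%M:%S.") + f"{now.microsecond // 1000:03d}"


def run_clock(refresh_s: float = 0.001) -> None:
    """Continuously redraw the current local time on a single terminal line."""
    try:
        while True:
            # "\r" returns the cursor to the line start so the value is overwritten.
            sys.stdout.write("\r" + format_clock(datetime.now()))
            sys.stdout.flush()
            time.sleep(refresh_s)
    except KeyboardInterrupt:
        sys.stdout.write("\n")  # stop cleanly with Ctrl+C


if __name__ == "__main__":
    run_clock()
```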

NVIDIA Jetson TX2 SoM (System-on-Module):

The RTSP streams from the cameras can be received with the VLC application on a client device such as a MacBook running macOS, or a desktop or laptop running Windows or Linux.
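On the receiving side, VLC buffers network streams before playback (controlled by its `--network-caching` option, in milliseconds, typically 1000 ms by default), and this buffer adds directly to the measured G2G delay. The sketch below launches VLC with a smaller buffer; the RTSP address and the 100 ms value are hypothetical and must be adapted to the actual stream and network.

```python
import shutil
import subprocess


def vlc_command(rtsp_url: str, network_caching_ms: int = 100) -> list[str]:
    """Build a VLC command line that plays an RTSP stream with a small buffer."""
    if network_caching_ms < 0:
        raise ValueError("network_caching_ms must be non-negative")
    return ["vlc", f"--network-caching={network_caching_ms}", rtsp_url]


if __name__ == "__main__":
    # Hypothetical address: replace with the RTSP URL served by the Jetson.
    cmd = vlc_command("rtsp://192.168.0.10:8554/camera0")
    if shutil.which(cmd[0]) is None:
        print("VLC not found on PATH; command would be:", " ".join(cmd))
    else:
        subprocess.run(cmd, check=False)
```

A buffer that is too small can cause stuttering on an unstable Wi-Fi link, so the value is a trade-off between latency and smoothness.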

The final result is to see two numbers on the same monitor: one with the current time and the other with the time of capture.

The difference between them is the latency of the imaging system.
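Once both numbers have been read off the monitor (for example from a photo or a screenshot), the latency is their difference. The sketch below assumes both readings use an `HH:MM:SS.mmm` format and handles the wrap-around at midnight; the function names are hypothetical.

```python
DAY_MS = 24 * 60 * 60 * 1000


def clock_to_ms(reading: str) -> int:
    """Convert an 'HH:MM:SS.mmm' clock reading to milliseconds since midnight."""
    hms, ms = reading.split(".")
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * 1000 + int(ms)


def g2g_latency_ms(current: str, captured: str) -> int:
    """Latency = current time minus capture time, wrapping around midnight."""
    return (clock_to_ms(current) - clock_to_ms(captured)) % DAY_MS


if __name__ == "__main__":
    # Illustrative readings, not measured values.
    print(g2g_latency_ms("12:00:01.250", "12:00:01.130"), "ms")  # prints 120 ms
```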

To connect cameras and other peripherals to the Jetson TX2, a special carrier board is needed.

Described below is a proprietary carrier board (xEC2) designed by XIMEA to support multiple cameras.

Fig.3. Jetson carrier board from XIMEA

Its various slots make it possible to attach up to eight different XIMEA cameras to a single Jetson TX2:

Fig.4. Fastvideo SDK for realtime image and video processing on NVIDIA GPU

The benchmarks below were obtained with two XIMEA MX031CG-SY-X2G2 cameras and a receiving desktop station with an NVIDIA Quadro P2000 GPU, connected over Wi-Fi.

The G2G values in these benchmarks include both video processing and video transmission.

The presented method gives reasonable estimates; the values depend on the complexity of the video processing pipeline.
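Because a single G2G reading depends on where the event falls within the frame interval, it can help to take several snapshots and report their spread rather than one number. The helper below is a hypothetical sketch using only Python's standard `statistics` module; the readings are passed on the command line.

```python
import statistics
import sys


def summarize(latencies_ms: list[float]) -> dict[str, float]:
    """Summarize repeated G2G readings, which vary from run to run."""
    if not latencies_ms:
        raise ValueError("need at least one reading")
    return {
        "n": len(latencies_ms),
        "min": min(latencies_ms),
        "max": max(latencies_ms),
        "mean": statistics.fmean(latencies_ms),
        "median": statistics.median(latencies_ms),
        # The sample standard deviation needs at least two readings.
        "stdev": statistics.stdev(latencies_ms) if len(latencies_ms) > 1 else 0.0,
    }


if __name__ == "__main__":
    # Pass measured latencies in ms, e.g. `python summarize.py 120 135 118`.
    readings = [float(arg) for arg in sys.argv[1:]]
    if not readings:
        sys.exit("usage: summarize.py LATENCY_MS [LATENCY_MS ...]")
    for key, value in summarize(readings).items():
        print(f"{key:>6}: {value:.1f}")
```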

More precise latency measurement methods use a light-emitting diode (LED) as a light source and a phototransistor as a light detector.

In such a setup, a blinking LED in the camera's field of view acts as the signal generator, and a photoelectric sensor is placed on the display at the spot where the LED is shown.

The LED signal triggers an oscilloscope that also records the output of the photoelectric sensor, and the time between the two signals gives the G2G delay.

The data can also be analyzed automatically with a microcontroller board.
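The automatic analysis can be sketched as edge detection on two sampled channels: the LED drive signal and the phototransistor output. This sketch assumes both channels are normalized to 0..1 (rising when the LED turns on), sampled at the same rate, and that one blink is analyzed at a time; the function names and the 0.5 threshold are hypothetical. On an actual microcontroller this logic would usually be written in C or done with timer input capture; Python is used here only to show the idea.

```python
from collections.abc import Sequence


def first_rising_edge(samples: Sequence[float], threshold: float) -> int:
    """Index of the first sample that crosses `threshold` from below."""
    for i in range(1, len(samples)):
        if samples[i - 1] < threshold <= samples[i]:
            return i
    raise ValueError("no rising edge found")


def g2g_delay_s(led: Sequence[float], sensor: Sequence[float],
                sample_rate_hz: float, threshold: float = 0.5) -> float:
    """Delay between the LED switching on and the sensor seeing it on screen."""
    led_edge = first_rising_edge(led, threshold)
    sensor_edge = first_rising_edge(sensor, threshold)
    return (sensor_edge - led_edge) / sample_rate_hz


if __name__ == "__main__":
    # Synthetic signals sampled at 1 kHz, not real measurements.
    led = [0.0] * 10 + [1.0] * 90
    sensor = [0.0] * 60 + [1.0] * 40
    print(f"{g2g_delay_s(led, sensor, 1000.0) * 1e3:.1f} ms")  # prints 50.0 ms
```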

Credits

Fastvideo Blog:

https://www.fastcompression.com/blog/low-latency-h264-streaming-from-jetson-tx2.htm