Remote Reality Teleoperation Project

With advances in wireless communication (5G networks), visualization technologies (Virtual Reality), control units and sensors, it has become possible to train machine operators in a virtual environment as well as to teleoperate machines in remote locations. The Robotic Systems Lab at ETH Zurich is working on a project whose goal is to deliver as precise and realistic an experience as possible to the excavator operator.

The operator will have all relevant information in the same form as if he/she were in the cockpit of an excavator.

Furthermore, he/she can receive additional semantic data that is not available in the excavator, making remote work more convenient.

Environment and showcase

Some of the first intended use cases are trade show presentations and crane operator training in special laboratories. Trade fairs have specific conditions similar to an outdoor environment, with changing sunlight and variable humidity.

Menzi Muck AG, the walking excavator manufacturer, in collaboration with ETH Zürich, demonstrated such a remotely operated excavator at Bauma Fair 2019.

The showcase consisted of a Menzi Muck simulator set for operator training (utilizing VR goggles) connected to an excavator located in a different city.

The cameras use a low-latency image processing solution provided by MRTech, running on the NVIDIA Jetson TX2 platform.

In the following video, the excavator is located at the ETH Zürich Hönggerberg campus and is equipped with industrial Wi-Fi and a 4G/5G router for streaming and control.

Since it is an outdoor environment, the exposure of the cameras is updated dynamically.

To examine the components used in such a complex application, the description is split into several sections that also cover the reasons for particular solutions.

HEAP - Hydraulic excavator for an autonomous purpose

The role of the excavator was played by HEAP, a customized Menzi Muck M545 excavator developed for autonomous use cases as well as advanced teleoperation. The machine has novel force-controllable hydraulic cylinders in the chassis that allow it to adapt to any terrain.

Additionally, HEAP is equipped with the sensors necessary for autonomous operation, i.e. LIDARs, IMU, GNSS and joint sensing.

The primary autonomous use is robotic landscaping/excavation, investigated within the National Centre of Competence in Research (NCCR) Digital Fabrication, or dfab.

Sensorized custom Menzi Muck M545 robot called HEAP

Embedded Remote Reality streaming

The ETH team developed an actuated stereo video streaming setup in cooperation with MRTech, the official system integrator of XIMEA cameras. The collaboration with MRTech originated from the effort to achieve full-resolution streaming from the cameras using the following hardware components:

- Nvidia Jetson TX2 + carrier board

- 2x PCIe xiX cameras with 2064 x 1544 resolution, 1/1.8” sensor and 122 fps (218 fps max)

- Theia MY125M ultra-wide-angle lenses (optically corrected fisheye, ~150°)

- Gremsy T3 brushless pan-tilt-roll unit coupled with HTC Vive

- Arduino Nano to apply the HW trigger to the cameras

This setup runs on Ubuntu 16.04 and employs GPU-accelerated image processing and video compression (currently H.264) on the stereo video stream.

Currently, the achievable resolution is 1416 x 1416 at 40 fps, or 80 fps with interleaved event rendering - see the HW trigger graph in the figure below.
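As a rough sense of scale, the uncompressed data rates implied by these figures can be estimated with a few lines of Python. This is only a back-of-the-envelope sketch: it assumes 8-bit RAW pixels and ignores any transport overhead, and the function name is illustrative rather than part of the actual system.

```python
def raw_rate_mb_per_s(width, height, fps, bits_per_pixel=8, cameras=1):
    """Uncompressed sensor data rate in megabytes per second (1 MB = 1e6 bytes)."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return cameras * bytes_per_frame * fps / 1e6


# Full sensor resolution at the quoted 122 fps, both cameras (8-bit RAW assumed).
full_rate = raw_rate_mb_per_s(2064, 1544, 122, cameras=2)

# Currently streamed resolution at 40 fps per camera.
stream_rate = raw_rate_mb_per_s(1416, 1416, 40, cameras=2)

print(f"full sensor, stereo:    {full_rate:.1f} MB/s")
print(f"current stream, stereo: {stream_rate:.1f} MB/s")
```

Under these assumptions the stereo pair produces roughly 160 MB/s of raw data at the current streaming settings, which is why GPU-side processing and H.264 compression are needed before the stream is sent over a mobile network.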

The resolution and frame rate can be improved further by replacing the camera model and, especially, by upgrading the Jetson to the AGX Xavier version in the future.

The Gremsy gimbal is normally used for stabilized imaging, but ETH converted it into a position-controlled slave robot that mimics the operator's head motions.

For slow head motions the response of the Gremsy is adequate, but during fast movements the operator can perceive the latency.

Experience so far shows that the teleoperator does not move his/her head very often or very fast, but a custom gimbal could be designed to reduce this latency.
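The effect of a limited gimbal speed on fast head motions can be illustrated with a small simulation. This is a minimal sketch, not the ETH controller: the `Angles` type, the `step_gimbal` function and the 100 deg/s rate limit are all hypothetical, and angle wrap-around is not handled.

```python
from dataclasses import dataclass


@dataclass
class Angles:
    pan: float   # yaw in degrees
    tilt: float  # pitch in degrees
    roll: float  # roll in degrees


def clamp(value, low, high):
    return max(low, min(high, value))


def step_gimbal(current, target, max_rate_dps, dt):
    """Move each axis toward the target by at most max_rate_dps * dt degrees."""
    max_step = max_rate_dps * dt

    def move(cur, tgt):
        return cur + clamp(tgt - cur, -max_step, max_step)

    return Angles(move(current.pan, target.pan),
                  move(current.tilt, target.tilt),
                  move(current.roll, target.roll))


# The operator turns the head by 60 degrees almost instantly.
head = Angles(pan=60.0, tilt=0.0, roll=0.0)
gimbal = Angles(pan=0.0, tilt=0.0, roll=0.0)

# Ten control steps of 10 ms each (0.1 s in total) at an assumed 100 deg/s limit.
for _ in range(10):
    gimbal = step_gimbal(gimbal, head, max_rate_dps=100.0, dt=0.01)

lag_deg = head.pan - gimbal.pan
print(f"gimbal pan after 0.1 s: {gimbal.pan:.1f} deg, lag: {lag_deg:.1f} deg")
```

With a slow head motion the per-step error stays below the rate limit and the gimbal tracks closely; with a fast turn the gimbal trails behind, which the operator perceives as latency.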

Image processing pipeline at the teleoperator side

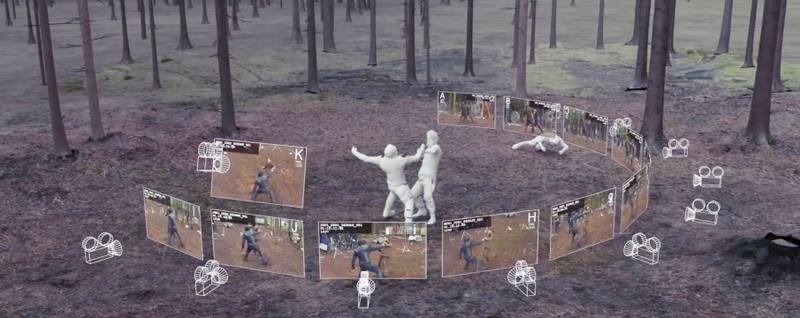

End-to-end remote reality streaming setup

Multi-camera embedded system

MRTech has developed a modular multi-platform framework for image processing and machine vision applications, focused on high dynamic range and the lowest latency. For this particular project, the following GPU-accelerated processing pipeline is implemented on the NVIDIA Jetson TX2 platform, with a carrier board used for interconnection.

A switch to the AGX Xavier will bring even higher processing bandwidth and an increase in frame rate.

The current pipeline includes these steps:

- 8, 10 and 12 bit RAW image acquisition from XIMEA camera

- black level subtraction

- auto white-balance

- high quality demosaicing (debayer)

- gamma correction

- conversion to YUV format

- H.264 / H.265 video encoding

- RTSP streaming

Steps 2-6 (black level subtraction through conversion to YUV) are implemented using the high-performance Fastvideo SDK, which runs on the GPU.
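To make the order of steps 2-6 concrete, the sketch below runs them on a tiny RGGB Bayer mosaic in plain Python. It is a CPU reference illustration only and does not use or imitate the Fastvideo SDK API. Several choices are assumptions made for brevity: an RGGB pattern, a black level of 64 on 10-bit data, gray-world white balance, demosaicing by simple 2x2 binning (which halves the resolution, unlike the high-quality demosaicing used in the real pipeline), a gamma of 2.2 and BT.601 YUV weights.

```python
def channel_of(y, x):
    """Colour of the sample at (y, x) in an RGGB Bayer mosaic."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def process_raw(raw, black_level=64, white_level=1023, gamma=2.2):
    """Turn a 2D list of RGGB samples into rows of (Y, U, V) tuples."""
    h, w = len(raw), len(raw[0])
    span = white_level - black_level

    # Black level subtraction, normalised to the range [0, 1].
    lin = [[max(v - black_level, 0) / span for v in row] for row in raw]

    # Gray-world auto white balance: scale R and B so their means match G.
    sums = {"R": 0.0, "G": 0.0, "B": 0.0}
    counts = {"R": 0, "G": 0, "B": 0}
    for y in range(h):
        for x in range(w):
            c = channel_of(y, x)
            sums[c] += lin[y][x]
            counts[c] += 1
    means = {c: sums[c] / counts[c] for c in sums}
    gains = {c: (means["G"] / means[c] if means[c] > 0 else 1.0) for c in means}
    bal = [[min(lin[y][x] * gains[channel_of(y, x)], 1.0) for x in range(w)]
           for y in range(h)]

    out = []
    for y in range(0, h, 2):
        row = []
        for x in range(0, w, 2):
            # Demosaic by 2x2 binning: one RGGB quad becomes one RGB pixel.
            r = bal[y][x]
            g = (bal[y][x + 1] + bal[y + 1][x]) / 2
            b = bal[y + 1][x + 1]
            # Gamma correction.
            r, g, b = (c ** (1 / gamma) for c in (r, g, b))
            # Conversion to YUV with BT.601 weights.
            luma = 0.299 * r + 0.587 * g + 0.114 * b
            row.append((luma, 0.492 * (b - luma), 0.877 * (r - luma)))
        out.append(row)
    return out


# A flat gray scene seen through a sensor with unequal channel sensitivity.
raw = [[264, 464, 264, 464],
       [464, 364, 464, 364],
       [264, 464, 264, 464],
       [464, 364, 464, 364]]
yuv = process_raw(raw)
print(yuv[0][0])
```

For a flat gray scene the white balance equalises the three channels, so the chroma components U and V come out at approximately zero and only the luma carries information.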

Additionally, the processing applies an asynchronous auto-exposure algorithm to adapt the camera settings to varying lighting conditions.
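The source does not describe the auto-exposure algorithm itself. As one possible illustration, the sketch below shows a simple damped proportional update that nudges the exposure time toward a target mean brightness. The function name, the target of 0.45, the damping factor and the exposure limits are all hypothetical; in an asynchronous design such an update would run outside the per-frame processing path and apply the new value to a later frame.

```python
def next_exposure_us(exposure_us, mean_brightness, target=0.45,
                     damping=0.5, min_us=50.0, max_us=20000.0):
    """Return a new exposure time in microseconds.

    mean_brightness is the mean image level normalised to [0, 1].
    """
    if mean_brightness <= 0:
        # Fully black frame: open up as far as the limits allow.
        return max_us
    # Exposure that would hit the target if the response were linear.
    ideal = exposure_us * target / mean_brightness
    # Move only part of the way to avoid visible brightness oscillation.
    blended = exposure_us + damping * (ideal - exposure_us)
    return max(min_us, min(max_us, blended))


# An overexposed frame (mean 0.9) shortens the exposure from 1000 us.
shorter = next_exposure_us(1000.0, 0.9)
print(f"new exposure: {shorter:.0f} us")
```

The upper limit is kept below the 25 ms frame period that corresponds to 40 fps, so a longer exposure never forces the frame rate down in this sketch.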

Finally, when the 2x MX031CG cameras acquire simultaneously (with hardware synchronization), the system adds 15 ms of latency for the above processing steps.

Total glass-to-glass latency should not exceed 60 ms, depending on the network parameters. The targeted communication environment is a 4G/5G network.
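The two figures above leave about 45 ms for everything outside the on-board processing. The short sketch below shows that arithmetic; only the 15 ms and 60 ms values come from the text, while the network, decode and display latencies are hypothetical numbers chosen purely for illustration.

```python
GLASS_TO_GLASS_TARGET_MS = 60.0  # upper bound stated in the text
PROCESSING_MS = 15.0             # latency stated for the processing steps


def remaining_budget_ms(network_ms, decode_ms, display_ms):
    """Latency left over after the known and the assumed stages are subtracted."""
    spent = PROCESSING_MS + network_ms + decode_ms + display_ms
    return GLASS_TO_GLASS_TARGET_MS - spent


# Hypothetical stage latencies, not measurements.
margin = remaining_budget_ms(network_ms=20.0, decode_ms=8.0, display_ms=12.0)
print(f"margin: {margin:.1f} ms")
```

A negative result would mean the assumed stages no longer fit within the 60 ms target, which is why the achievable latency depends on the network parameters.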

IBEX Motion Platform

Another part of the setup is IBEX, which has been designed for HEAP in order to provide accurate motion and visual feedback. Specifically, it is a recreation of HEAP's cockpit that sits on top of a 3-DoF motion platform.

The movement of the excavator chassis and the movement of the platform are synchronized.

This is essential, especially because of Menzi Muck's unique walking design, in which operators rely heavily on their sense of motion to estimate whether all four wheels are in contact with the ground.

In the initial design of IBEX, the visual feedback is provided via three 2-D monitors that display live camera streams from three different perspectives - the front window and two side windows.

As an extension, the monitors can be replaced with a head-mounted display (HMD) such as the HTC Vive to completely visually immerse the excavator operator in a remote reality projection of the excavator's true site.

This allows the operator to experience a real sense of immersion; in fact, the HMD enhances the operator's perception of three-dimensional space.

The image quality, visual acuity and field of view for the operators could improve even further once the HTC Vive is replaced with XTAL, which is described below.

The following table gives the specs of the coupling

Visualization

As mentioned above, the first version of the system was planned in combination with the HTC Vive headset. The next step for virtual reality was to use the XTAL VR headset from VRgineers, which offers a 180-degree field of view with a crystal-clear picture.

This is provided by a combination of 2x OLED panels with a total resolution of 5120x1440, running at 70 Hz.

It is connected to a computer using 1x DisplayPort 1.2, 1x USB and 1x power cord.
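A quick calculation shows why a single DisplayPort 1.2 link is sufficient for this panel configuration. The sketch assumes 24 bits per pixel and ignores blanking intervals, so the real link load is somewhat higher than the value computed here; the 17.28 Gbit/s figure is the DisplayPort 1.2 (HBR2, four lanes) payload rate after 8b/10b coding.

```python
DP12_PAYLOAD_GBPS = 17.28  # DisplayPort 1.2, HBR2, 4 lanes, after 8b/10b coding


def pixel_data_rate_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Active pixel data rate in Gbit/s, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


rate = pixel_data_rate_gbps(5120, 1440, 70)
print(f"pixel data rate: {rate:.2f} Gbit/s of {DP12_PAYLOAD_GBPS} Gbit/s")
```

Under these assumptions the headset needs about 12.4 Gbit/s, which leaves headroom on the link for blanking overhead.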

- OpenVR support

- NVIDIA VRWorks support

- testing eye tracking (30 fps)

- hand tracking via embedded Leap Motion sensor

- support for AR Tracking, Lighthouse tracking, Optitrack tracking, StarTrack tracking

- Linux support

- fast eye tracking (120 fps)

- nearside electromagnetic tracking (up to 2 meters - only suitable for seated experiences)

- front facing cameras as addon module

- Foveated rendering

Example of an operator who is mobile around the robot

Compact Teleoperation Backpack solution

Future requirements could include operating the excavator remotely without the use of IBEX, with the operator wearing all the equipment necessary to teleoperate the machine, similar to the picture above.

This setup would also make sense for teleoperating smaller machines that allow integration of the camera system.

Such a mobile operating station could also be considered for semi- or fully autonomous commanding using mixed reality.

Note: Needs to be tested with Ubuntu and ROS. HP has custom NVIDIA drivers for Quadro P5000

The backpack computer supplies sufficient performance and reliability, and comes with exchangeable batteries and a desktop docking station. Further specifications:

- Intel i7 7820HQ (2.9/3.9 GHz, 4 cores/8 threads)

- 16 GB RAM DDR4

- NVIDIA Quadro P5200

- 256 GB SSD

- Ubuntu 16.04

- SteamVR + OpenVR on Linux

Summary

For more information about any part of the system described above, please feel free to contact the companies mentioned below.

Partners

ETH Zurich, Robotic Systems Lab (RSL)

The Robotic Systems Lab investigates the development of machines and their intelligence to operate in rough and challenging environments. With a large focus on robots with arms and legs, our research includes novel actuation methods for advanced dynamic interaction, innovative designs for increased system mobility and versatility, and new control and optimization algorithms for locomotion and manipulation. In search of clever solutions, we take inspiration from humans and animals with the goal to improve the skills and autonomy of complex robotic systems to make them applicable in various real-world scenarios.

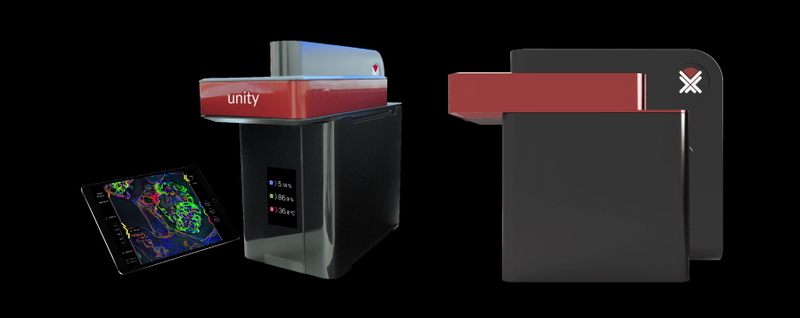

VRgineers

VRgineers specializes in the development of virtual and mixed reality technologies. ETH suggests using VRgineers' custom video player, DuplexPlayer, which is capable of playing stereoscopic video streams of up to 16K.

It is optimized for high-resolution footage and supports offline as well as online (real-time) video.

VRgineers is able to provide the source code of DuplexPlayer for certain projects. This enables the application of any distortion/fisheye correction filters to the video signal, as well as fine-tuning of the performance based on a CPU/GPU approach.

MRTech

MRTech develops cross-platform, high-performance image processing software for machine vision systems and has developed a unique embedded platform for unmanned and remote-control systems.

One of its foundation stones is parallel computing, the basis of the full image processing pipeline.

MRTech is a distributor and a technological and VAR partner of XIMEA.

Fastvideo is a well-known developer of a GPU-based image processing SDK.

Fastvideo implemented the first JPEG codec on CUDA, which is still the fastest on the market.

ETH Zürich, Mechanical and Process Engineering Dep.: https://rsl.ethz.ch/

MRTech s.r.o.: www.mr-technologies.com

Fastvideo LLC: www.fastcompression.com

NVIDIA link: www.nvidia.com

VRgineers: vrgineers.com
